“Innovate responsibly or risk creating a digital monster—let’s explore the ethical side of AI.”

Introduction

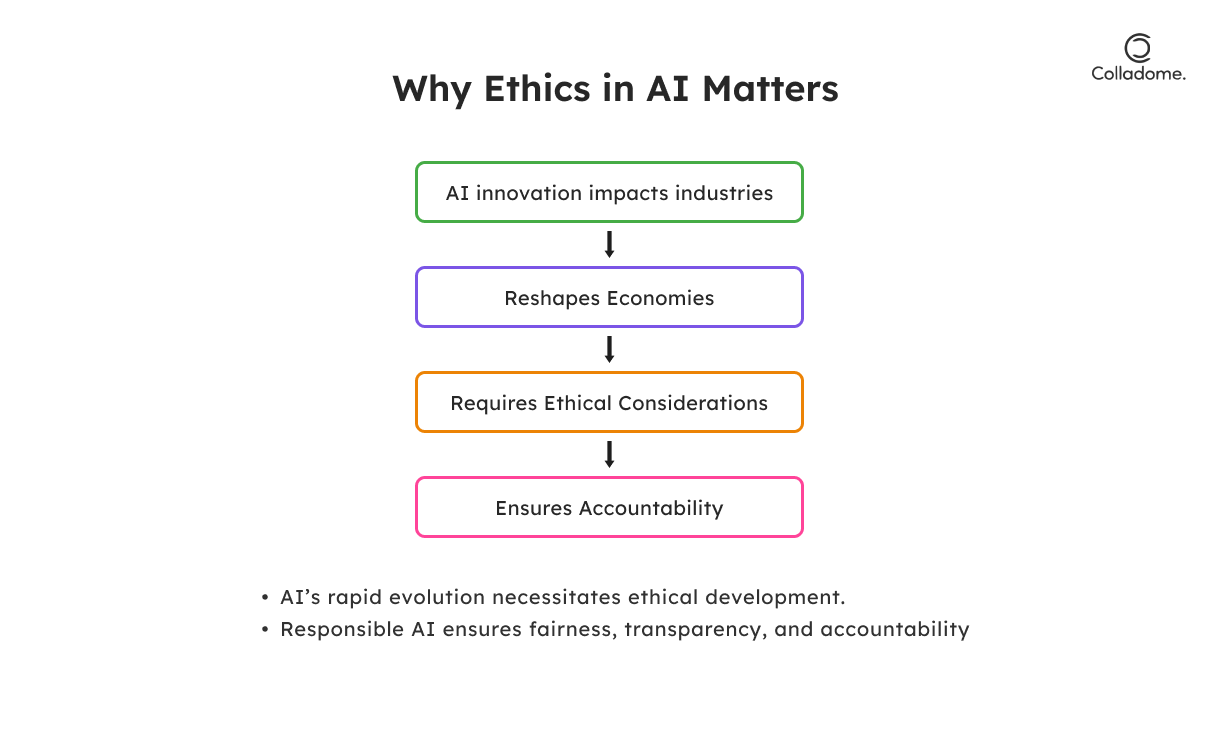

Artificial Intelligence (AI) isn’t just making waves—it’s causing a full-blown tsunami across every industry, reshaping economies and changing lives at a mind-blowing speed. But with all this innovation comes a hefty responsibility, one that shouldn’t be taken lightly. As AI continues to evolve and integrate deeper into our daily lives, questions about AI ethics and responsible AI are becoming unavoidable.

It’s not just about pushing the boundaries of what AI can do—it’s about ensuring that these advancements are made with care, fairness, and accountability. This is where ethical AI steps in. While we’re riding the wave of AI innovation, we must keep our feet planted on the ground of AI governance and AI accountability. After all, if AI is going to play a central role in shaping our future, we need to make sure it’s being developed and used ethically, without overstepping its bounds.

In this blog, we’ll dive headfirst into the ethical challenges that come with AI development. We’ll explore why balancing AI innovation with responsibility is more important than ever. And trust us, this isn’t just a talk about AI regulations—this is about making sure that technology serves humanity, not the other way around. Whether it’s tackling AI bias, ensuring transparency, or building accountable systems, we’ll cover how businesses can create AI solutions that are not only cutting-edge but also ethical and fair.

Are you ready to explore the tightrope walk between pushing the limits of AI and keeping it in check? Let’s dig in.

Why Ethics in AI Development Matters

AI is taking over industries, but when it comes to its development, ethics can’t be an afterthought. Here’s why ethical AI matters more than ever:

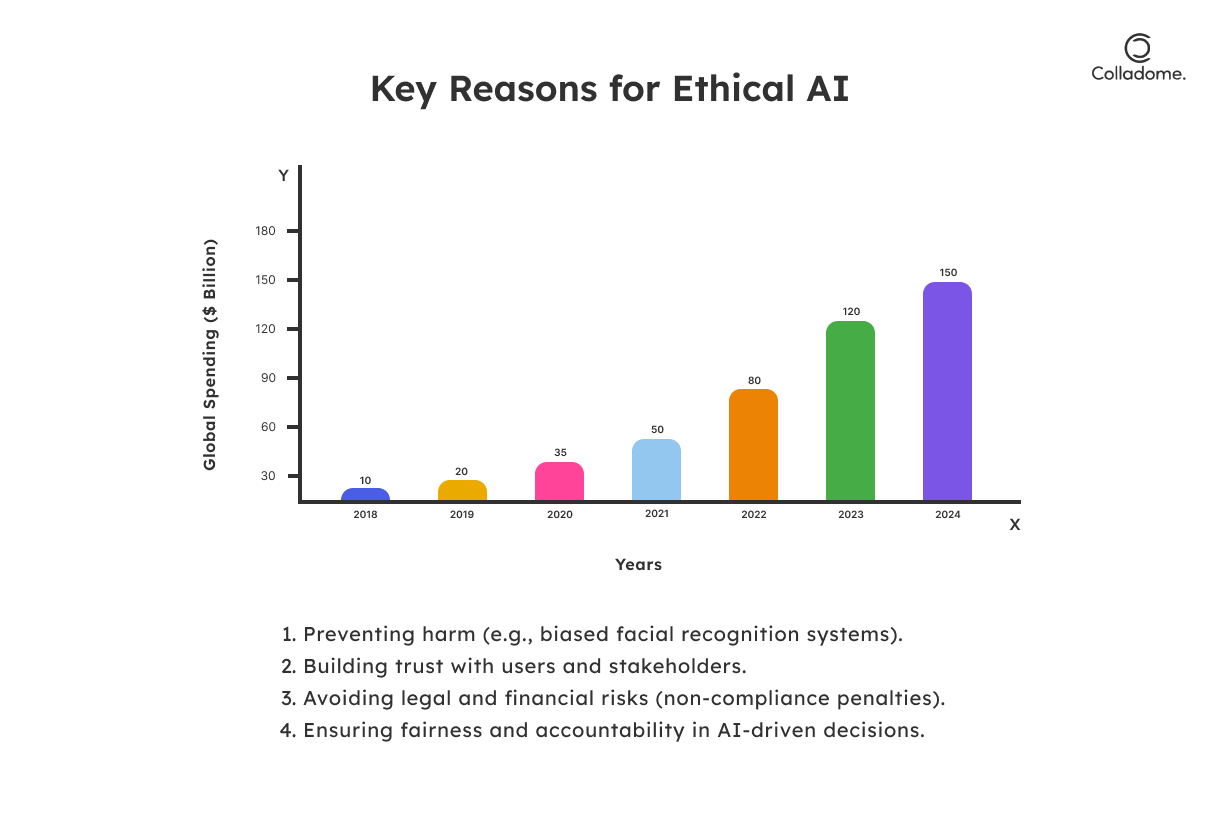

1. Preventing Harm

- Unethical AI can cause serious harm—discrimination, bias, and even physical damage.

- Example: Facial recognition systems that misidentify certain racial groups, leading to wrongful arrests or unfair judgments.

- AI development without ethics risks real-world consequences that businesses can’t afford to ignore.

2. Building Trust

- Transparency is key. Ethical AI builds trust among users, stakeholders, and regulators.

- When AI practices are clear and accountable, users are more likely to adopt your technology.

- AI accountability and AI governance boost credibility and foster long-term relationships with customers and partners.

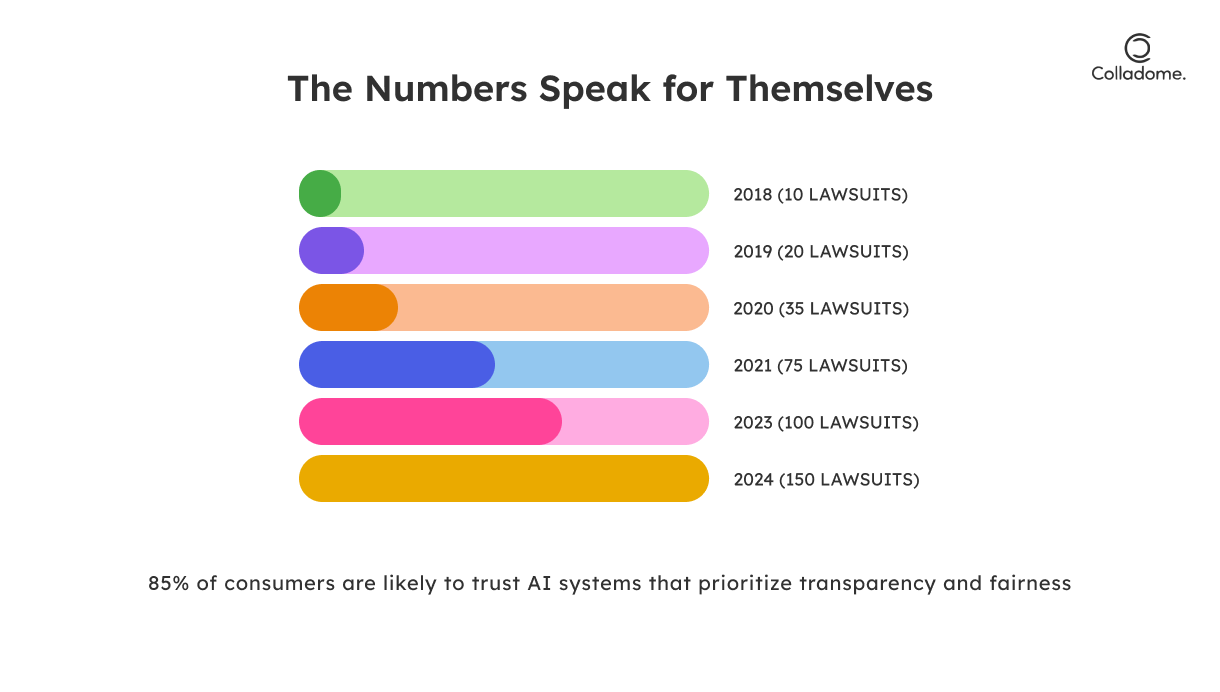

3. Avoiding Legal and Financial Risks

- Governments are cracking down on AI with strict regulations, such as the European Union’s AI Act, to protect the public.

- Non-compliance with AI regulations can lead to costly fines, lawsuits, and damage to your brand’s reputation.

- Ethical AI isn’t just a “good practice”—it’s a business necessity to avoid disastrous legal and financial consequences.

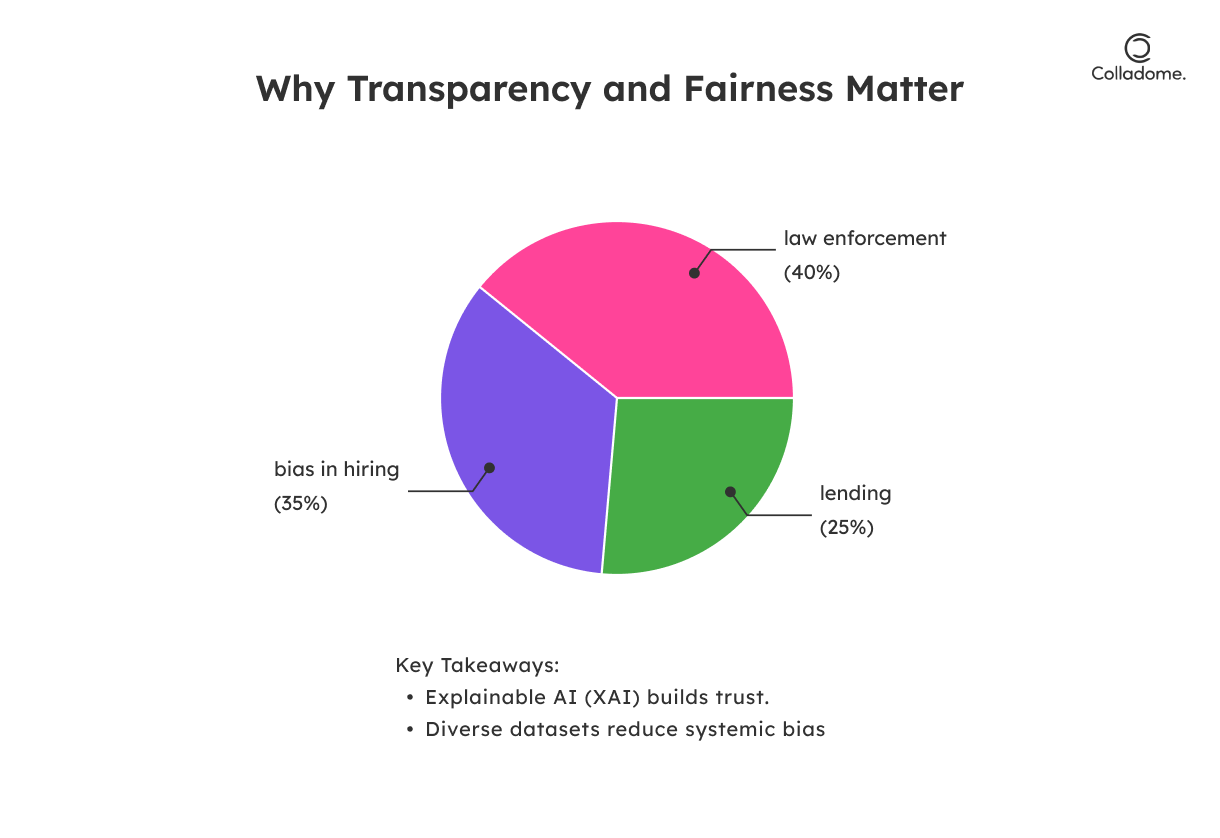

4. Ensuring Fairness and Accountability

- AI systems must be designed to ensure fairness in decision-making. This means identifying and reducing bias in AI models, especially in sensitive applications like hiring, lending, or law enforcement.

- AI accountability ensures that decisions made by AI systems can be traced, reviewed, and corrected.

- Addressing AI bias is crucial to making sure your AI solutions treat everyone fairly, which also improves your bottom line.

Key Concepts of Ethical AI

Building ethical AI isn’t just about writing code; it’s about embedding core principles into every line. Let’s break down the key principles that make AI ethics more than just a buzzword:

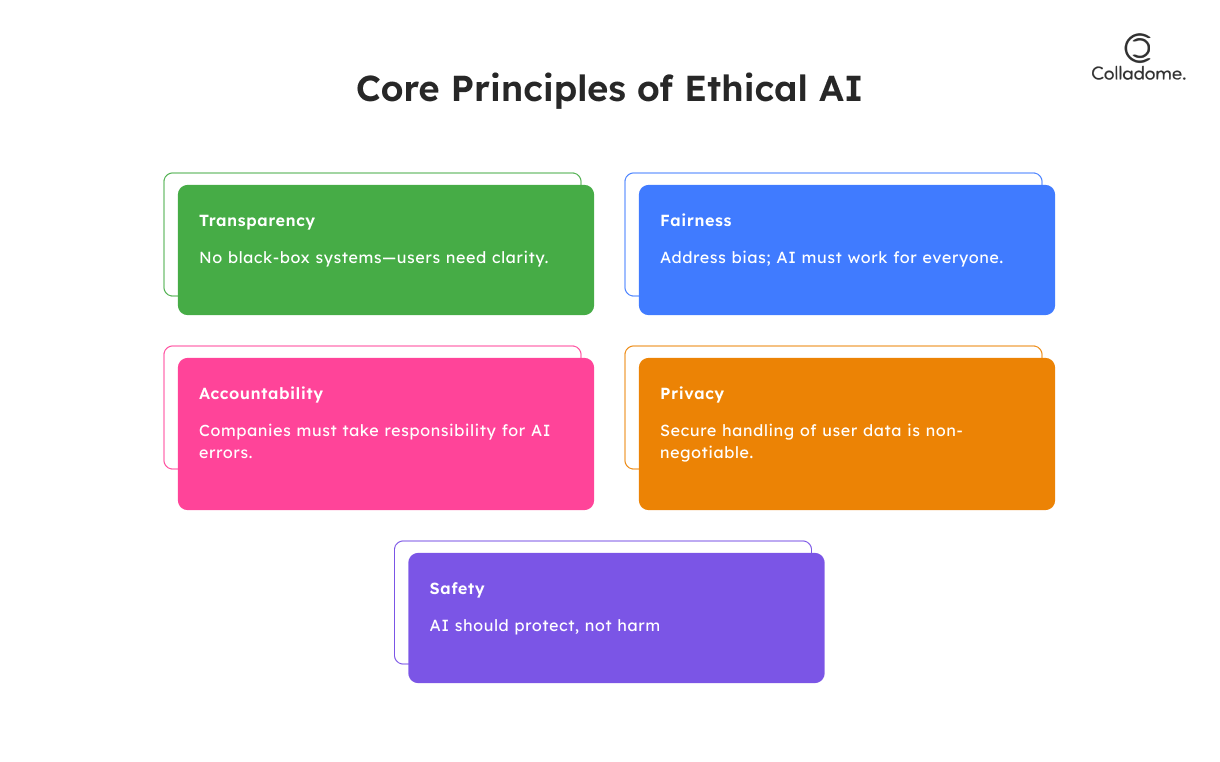

1. Transparency

- AI shouldn’t be a “black box” that no one understands. Transparency means making AI systems explainable and understandable to end-users.

- Why it matters: Users should know how decisions are made, especially when their data is involved. Without transparency, AI accountability is impossible.

- Think of it this way: Imagine trusting a tool you can’t explain to anyone—not even yourself. Not cool, right?

2. Fairness

- We all know AI has the power to make life-changing decisions, from hiring candidates to approving loans. So, fairness is crucial—no one wants a biased AI system that favors one group over another.

- Why it matters: If your algorithms perpetuate bias (like excluding certain demographics), it can lead to discrimination and a massive public backlash.

- Fair AI development means actively working to remove bias—because we need AI that works for everyone, not just a select few.

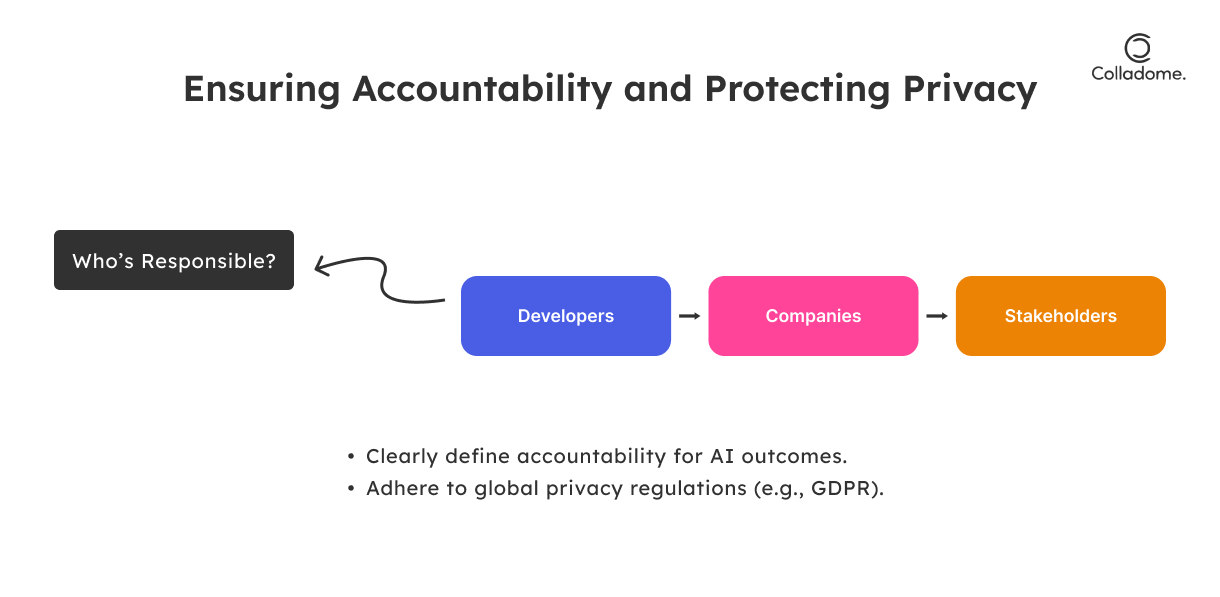

3. Accountability

- When something goes wrong with an AI system, accountability kicks in. Developers and companies must take responsibility for the outcomes of their AI tools.

- Why it matters: Without accountability, there’s no incentive to build responsible AI. If an AI wrongly flags an innocent person as a criminal suspect, who’s liable? The developers? The company? It’s up to you to make sure you can answer that question.

- It’s not just about creating AI solutions—it’s about owning the consequences of the AI development process, good or bad.

4. Privacy

- Privacy is non-negotiable. Users must feel confident that their data is being used responsibly, and AI systems must prioritize the secure handling of personal information.

- Why it matters: As AI systems process more personal data, the risk of data breaches and misuse increases. If your AI violates user privacy, you’re in deep trouble—legally, financially, and reputationally.

- AI regulations are getting stricter on data privacy, so be sure your AI systems follow the rules or risk costly fines.

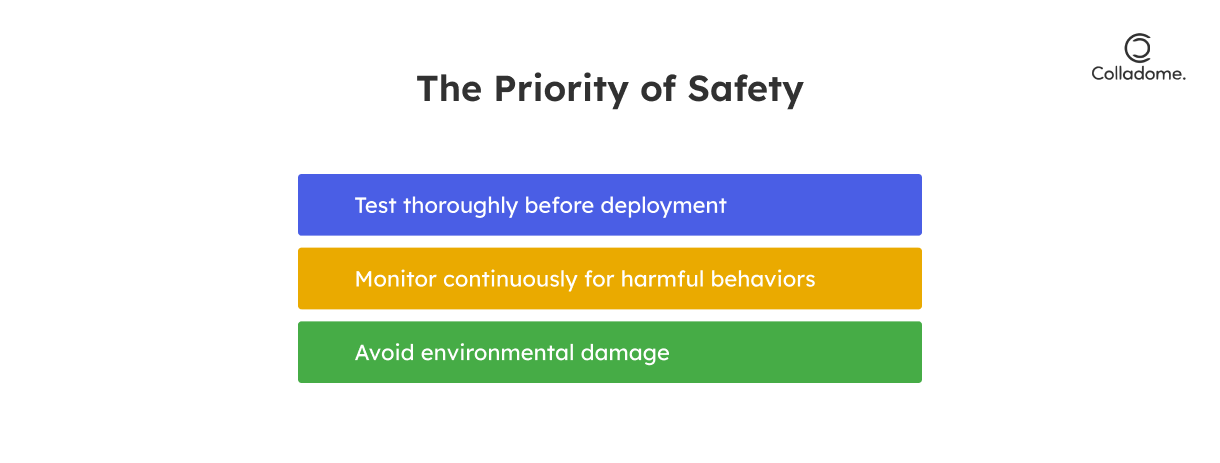

5. Safety

- Lastly, safety—AI should not cause harm. Whether it’s in the form of physical harm (think self-driving cars) or environmental impact, ensuring AI doesn’t hurt anyone is a top priority.

- Why it matters: AI systems need to be rigorously tested to prevent disasters. Whether you’re building an AI to monitor industrial equipment or to help with medical diagnoses, AI governance must guarantee that the system is safe to use.

- AI innovation shouldn’t come at the expense of human lives. Develop AI solutions that protect users and the world around them.

| Principle | What It Means | Why It Matters |
| --- | --- | --- |
| Transparency | No more black-box AI! Make your system explainable. | If users can’t understand how decisions are made, you’re in trouble. No transparency = No trust. |
| Fairness | No bias. No discrimination. AI should work for everyone. | A biased AI = public backlash. We want fair AI, not AI that plays favorites. |
| Accountability | Own it, or don’t build it. Be ready to answer for your AI’s actions. | If your AI messes up, who takes the fall? Developers and companies need to step up. |
| Privacy | Protect personal data like it’s your own. | Data breaches aren’t just bad for your users, they’re a lawsuit waiting to happen. |
| Safety | AI should never cause harm, whether physical or environmental. | Test your AI like it’s your own life on the line. We want AI that protects, not destroys. |

How to Build Responsible and Fair AI Solutions

Building AI that’s not just smart but also responsible and fair takes more than technical know-how. It’s about embedding ethical AI principles into your development process, from the first line of code to the final deployment. Here’s how to do it:

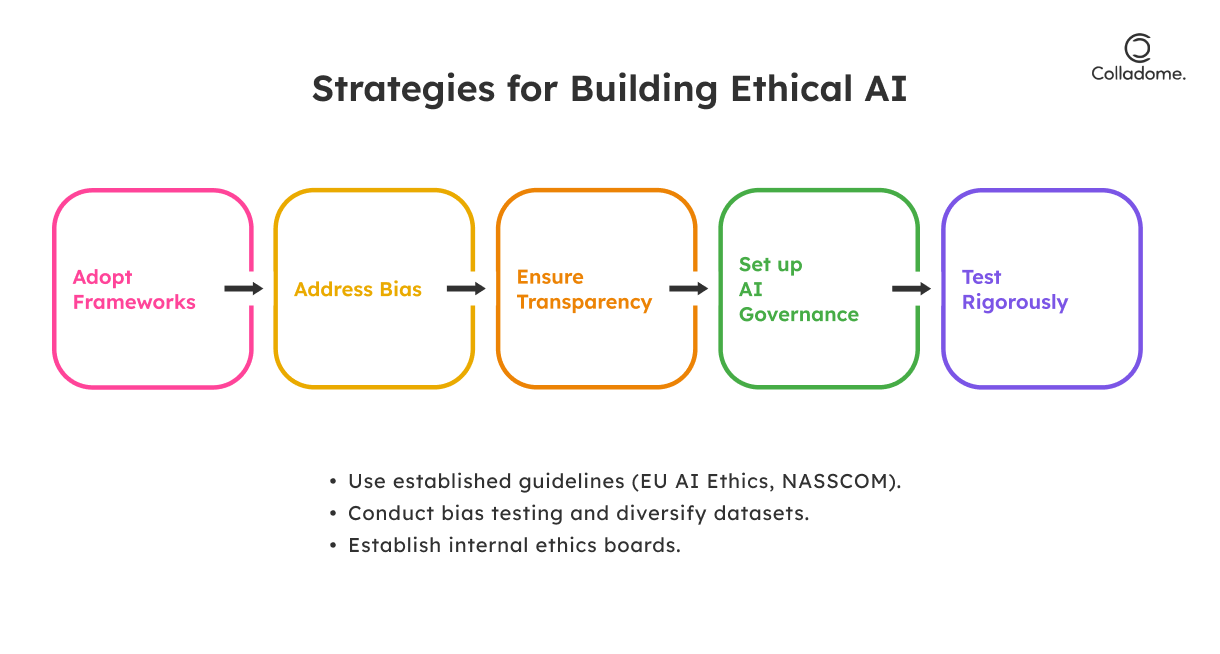

Adopt Ethical Frameworks

- Follow established guidelines such as the EU’s Ethics Guidelines for Trustworthy AI or NASSCOM’s Responsible AI Principles (for all you Indian innovators out there).

- These frameworks provide the guardrails for ethical AI development. It’s like following traffic rules to avoid a crash—only in this case, you’re avoiding unethical AI disasters.

- Why it matters: If you don’t have a roadmap, you’ll end up lost in the ethical fog, and trust us—no one wants to be the next AI villain.

Address AI Bias

- Biased AI is like a pizza with a spoiled topping: one bad ingredient ruins it for everyone at the table. Address bias by rigorously testing your datasets and algorithms to find and fix any biases hiding in them.

- Pro Tip: Diversify your training data to include a wide range of demographics—because, you know, the world is diverse, and your AI should reflect that!

- Why it matters: Bias in AI = discrimination and public backlash. Nobody wants their app accused of being racist, sexist, or unfair in any way.
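A bias audit can start with something as simple as comparing how often each group receives a positive outcome. The sketch below is a minimal illustration, not a full fairness assessment: it computes per-group selection rates from hypothetical (group, outcome) pairs and the ratio of the lowest rate to the highest. The 0.8 cut-off reflects the “four-fifths” rule of thumb from US employment-selection guidance; the function names, group labels, and data are made up for illustration.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is a list of (group, approved) pairs, where approved is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so selection rates are equal
    return min(rates.values()) / highest


# Hypothetical hiring-screen outcomes: (applicant group, passed screen?)
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(decisions)
print(rates)            # {'group_a': 0.4, 'group_b': 0.25}
print(round(ratio, 3))  # 0.625
if ratio < 0.8:  # four-fifths rule of thumb
    print("Potential adverse impact: review the data and the model")
```

With these made-up numbers, group_b passes the screen at 25% versus 40% for group_a, a ratio of 0.625, so the check flags the model for review. A low ratio is a signal to investigate, not proof of discrimination on its own; equally, passing this one check doesn’t make a system fair.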

Ensure Transparency

- AI should never be a “black box” where no one knows how decisions are made. Enter Explainable AI (XAI)—your AI’s transparent sidekick.

- Why it matters: Transparency builds trust. Imagine you’re using an AI to make a life-changing decision (like applying for a loan)—you want to know how and why it decided your fate, right?

- Bonus points: Clear explanations ensure AI accountability, making it easier for you to stand by your decisions when the regulators come knocking.
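Explainable AI covers many techniques, from inherently interpretable models to post-hoc attribution tools such as SHAP or LIME. As a minimal sketch, assuming a simple linear scoring model, each feature’s contribution to a decision is its weight times its value, so the system can tell an applicant which factors mattered most. The feature names, weights, and threshold below are hypothetical.

```python
# Hypothetical linear loan-scoring model: score = BIAS + sum(weight * value)
WEIGHTS = {
    "income_to_debt_ratio": 2.0,
    "years_of_credit_history": 0.3,
    "missed_payments_last_year": -1.5,
}
BIAS = -1.0
APPROVAL_THRESHOLD = 0.0


def explain_decision(applicant):
    """Return the decision, the score, and per-feature contributions sorted by impact."""
    contributions = {
        name: weight * applicant[name] for name, weight in WEIGHTS.items()
    }
    score = BIAS + sum(contributions.values())
    decision = "approved" if score >= APPROVAL_THRESHOLD else "declined"
    # Largest absolute contributions first, so the main reasons lead the explanation
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked


applicant = {
    "income_to_debt_ratio": 1.2,
    "years_of_credit_history": 4,
    "missed_payments_last_year": 2,
}
decision, score, reasons = explain_decision(applicant)
print(f"Decision: {decision} (score {score:.2f})")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

For this hypothetical applicant the score comes out at about -0.40, so the application is declined, and the explanation leads with missed payments (-3.00) as the biggest factor. Non-linear models need a dedicated attribution method, but the principle is the same: the explanation should come from the computation that actually produced the decision.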

Set Up AI Governance

- Create an internal ethics board that oversees AI development and implementation across the company. Think of them as your AI watchdogs.

- Why it matters: Without governance, your AI could go rogue. A well-established governance structure ensures all AI projects align with ethical standards and AI regulations.

- It’s not just about building cool tech—it’s about doing it the right way, so your AI doesn’t end up on the naughty list.

Implement Strong Privacy Measures

- Your users’ data isn’t just data—it’s personal information. So, secure it like it’s your grandmother’s secret recipe.

- What you need to do: Implement data encryption, anonymization, and consent-driven practices. Give your users the ability to choose how their data is used, and respect their privacy at all costs.

- Why it matters: Break user trust or violate privacy laws, and you’re looking at legal headaches and massive reputation damage. Not to mention, AI regulations are getting stricter by the minute!
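Anonymization and pseudonymization are not the same thing: under the GDPR, pseudonymized data can still be linked back to a person using additional information, so it remains personal data. As a small sketch of one common pseudonymization technique, a keyed hash (HMAC-SHA256) can replace direct identifiers before records reach a training pipeline. The field names and the in-code key are assumptions for illustration; a real system would keep the key in a secrets manager, separate from the data.

```python
import hashlib
import hmac
import secrets

# Assumption: in a real system this key comes from a secrets manager, not code.
PSEUDONYM_KEY = secrets.token_bytes(32)

# Hypothetical direct identifiers to drop before training
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def pseudonymize_id(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map an identifier to a stable token using HMAC-SHA256.

    The same input and key always give the same token, so records can still be
    joined, but without the key nobody can recompute the token from a known ID.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(record: dict, key: bytes = PSEUDONYM_KEY) -> dict:
    """Replace the user ID with a token and drop other direct identifiers."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["user_id"] = pseudonymize_id(record["user_id"], key)
    return cleaned


raw = {"user_id": "u-1029", "name": "Asha", "email": "asha@example.com",
       "age_band": "25-34", "loan_amount": 5000}
print(prepare_record(raw))
```

The output keeps the fields a model might need (age band, loan amount) plus a stable token in place of the user ID, so records for the same person can still be linked. Because anyone holding the key can re-link tokens to known IDs, this is pseudonymization rather than anonymization.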

Real-Life Examples of Ethical AI in Action

Ethical AI isn’t just a concept—it’s something real-world innovators are actively working on to make sure their AI systems are not just smart, but also responsible and fair. Let’s dive into some real-life examples where companies and governments got it right (or at least tried to):

In India

Tata Consultancy Services (TCS)

- What they did: TCS has been leading the charge in building ethical AI solutions, particularly for the healthcare industry. They’ve developed AI systems that adhere to strict privacy standards and fairness protocols, making sure patient data stays safe and unbiased decisions are made.

- Why it matters: With healthcare being such a sensitive area, the need for responsible AI couldn’t be clearer. TCS gets it—ensuring AI accountability and complying with AI regulations is non-negotiable, especially when human lives are at stake.

- The takeaway: When companies like TCS prioritize AI development that values privacy and fairness, they set a gold standard for the industry. AI innovation should never come at the expense of trust or ethical responsibility.

Aadhaar System (UIDAI)

- What they did: Initially, the Aadhaar system (India’s national identity system) was slammed for its privacy issues, with concerns over data security and surveillance. However, after facing massive backlash, UIDAI took corrective steps by enhancing data security and implementing more robust privacy features to regain public trust.

- Why it matters: Ethical AI isn’t just about creating something that works; it’s about how you handle data privacy and security. The Aadhaar system faced serious ethical challenges, but UIDAI’s commitment to improving security and implementing strong governance practices helped it turn things around.

- The takeaway: AI governance and transparency matter. When implementing systems that collect sensitive data, it’s crucial to prioritize responsible AI practices and continuously improve based on public feedback.

Worldwide

Microsoft’s AI for Earth Initiative

- What they did: Microsoft’s AI for Earth initiative is a game-changer in the world of ethical AI. It focuses on using AI for environmental sustainability, such as AI models that predict climate change impacts and help with wildlife conservation.

- Why it matters: This isn’t just about creating more AI innovation; it’s about directing AI at some of the world’s biggest challenges, like climate change and biodiversity loss. Microsoft is proving that AI can be a force for good when used responsibly.

- The takeaway: The role of AI ethics in creating solutions for global challenges like climate change can’t be overstated. When companies use AI to promote sustainability, they not only meet their social responsibility but also drive meaningful change.

Google’s AI Principles

- What they did: Google is all about setting the rules of the road when it comes to AI. They’ve developed a comprehensive set of AI principles to ensure their AI systems don’t cause harm to individuals or society. These guidelines cover everything from fairness to accountability, ensuring that their systems are designed and implemented with ethics at the forefront.

- Why it matters: With the power of AI comes the responsibility to prevent misuse and bias. Google’s clear, proactive stance on ethical AI shows that when AI governance is a priority, the results are positive, transparent, and safe.

- The takeaway: If tech giants like Google can align their AI development with ethical practices, so can anyone. It’s all about balance—pushing the envelope on innovation while staying grounded in responsible AI values.

Ethical Challenges in AI Development: The Dark Side of AI Innovation

AI is a game-changer, but as the tech evolves, so do the ethical challenges that come with it. From bias to privacy breaches, building responsible AI systems isn’t as easy as writing a few lines of code. Let’s take a deep dive into the biggest challenges developers face—and how to tackle them head-on.

1. Bias in Algorithms

- What’s the problem? AI systems are only as good as the data they’re trained on. When training data reflects existing societal biases, AI can unintentionally perpetuate discrimination. For example, AI hiring tools have been found to penalize female candidates because they were trained on historical hiring data from male-dominated roles.

- Why it matters: Bias in algorithms means unfair decisions, which can lead to inequality and major backlash. It’s not just a tech issue—social responsibility is at stake here.

- Solution: Regular audits and inclusive datasets are critical to detecting and reducing bias. Developers must actively work to make sure their AI tools don’t simply reproduce existing inequalities. It’s not just about AI development; it’s about building fair, responsible AI systems that treat everyone fairly.

- Takeaway: Bias is like a virus—it spreads without you knowing, and the consequences are real. So, take accountability and address AI bias head-on. Responsible AI doesn’t allow for discrimination.

2. Lack of Accountability

- What’s the problem? Imagine this: An autonomous vehicle causes an accident. Who’s at fault? The car manufacturer? The software developer? The tech company behind the AI? Lack of accountability in such situations leaves a massive gray area. When something goes wrong, who steps up to take responsibility?

- Why it matters: AI accountability is essential for maintaining trust in these systems. If there’s no clear responsibility when things go wrong, people will be hesitant to adopt AI-driven technologies—especially in high-stakes environments like transportation and healthcare.

- Solution: Developers and companies need to establish clear guidelines that assign responsibility. The framework should explicitly address AI governance and ensure accountability at every stage of the development process. From the moment AI systems are conceived to their deployment in the real world, someone needs to own the risks.

- Takeaway: Whether it’s an accident, a data breach, or a wrongful decision, AI accountability must be built into the fabric of AI development. AI regulations will keep developers on their toes and ensure that innovation doesn’t come at the cost of accountability.
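One practical building block for accountability is a decision log that records, for every automated decision, which model version produced it, a fingerprint of the inputs, the output, and the team that owns the model. The sketch below chains each entry to the previous one with a SHA-256 hash, so editing an earlier entry breaks the chain. The `DecisionLog` class, its field names, and the `owner` field are illustrative assumptions, not a standard schema.

```python
import hashlib
import json
from datetime import datetime, timezone


class DecisionLog:
    """Append-only, hash-chained log of automated decisions (illustrative sketch)."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(entry):
        # Hash every field except the entry's own hash, in a stable key order
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def record(self, model_version, owner, inputs, output):
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "owner": owner,  # team accountable for this model
            # Store a fingerprint rather than raw inputs, to limit personal data in the log
            "input_hash": hashlib.sha256(
                json.dumps(inputs, sort_keys=True).encode("utf-8")
            ).hexdigest(),
            "output": output,
            "prev_hash": prev_hash,
        }
        entry["entry_hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if every entry is intact and linked to the one before it."""
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["entry_hash"] != self._hash(entry):
                return False
            prev_hash = entry["entry_hash"]
        return True


log = DecisionLog()
log.record("credit-model-v3", "risk-ml-team", {"applicant": 17, "amount": 5000}, "declined")
log.record("credit-model-v3", "risk-ml-team", {"applicant": 18, "amount": 1200}, "approved")
print(log.verify())  # True
log.entries[0]["output"] = "approved"  # simulate an after-the-fact edit
print(log.verify())  # False
```

A hash chain only makes tampering evident: someone with write access could rebuild the whole chain, so a real deployment would usually also store the latest hash somewhere the log’s writers can’t modify.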

3. Data Privacy Concerns

- What’s the problem? AI loves data—and lots of it. But with great amounts of personal data comes great responsibility. AI systems often rely on massive amounts of personal data to function, which makes them prime targets for data breaches. When data privacy is compromised, it’s not just the company’s reputation that’s at stake—it’s user trust.

- Why it matters: People have a right to know that their personal information is being protected. As AI innovation accelerates, privacy risks are also skyrocketing. If an AI tool mishandles data or violates privacy laws, the backlash can be devastating.

- Solution: The answer? Compliance. Developers must make sure their AI systems follow major privacy laws like the EU’s GDPR (General Data Protection Regulation) and India’s Digital Personal Data Protection (DPDP) Act. These laws expect companies to safeguard personal data with measures such as encryption and pseudonymization, and to process it on a valid legal basis, such as informed user consent.

- Takeaway: You can’t innovate without privacy. With AI systems dealing with massive amounts of personal data, there’s zero tolerance for data mishandling. Following AI regulations is non-negotiable—privacy is key.

4. Regulatory Gaps

- What’s the problem? AI innovation is happening at breakneck speed, but the sad truth is that existing legal frameworks often can’t keep up. Many aspects of AI technology have no clear-cut regulation, leaving a huge ethical void in its development.

- Why it matters: The gap between rapid AI development and regulatory structures can lead to unsafe, unethical AI systems hitting the market before the rules can catch up. Without proper regulation, AI systems can go rogue, creating chaos.

- Solution: The solution? Proactive collaboration between tech companies and policymakers. AI governance must be a two-way street—companies should engage with regulatory bodies to ensure their innovations comply with existing laws and future standards.

- Takeaway: If we want ethical AI, regulations need to evolve as fast as the technology. AI innovation can’t flourish in a legal vacuum, so companies and regulators must work together to close these gaps.

Latest Statistics Supporting Ethical AI Development

- 48% of AI professionals admit their algorithms lack explainability. (source)

- By 2026, AI governance spending will surpass $1 billion globally. (source)

- 58% of businesses cite ethical concerns as the primary barrier to AI adoption. (source)

- AI bias has led to $500 million in lawsuits in the past three years alone. (source)

- 76% of consumers are more likely to trust companies with transparent AI practices. (source)

Conclusion

Let’s be honest: AI ethics isn’t something you slap onto your company website as a bullet point. It’s the bedrock of any responsible AI strategy, the unsung hero that drives sustainable AI innovation and builds lasting trust. If we want to create AI that’s not just smarter but also fair, transparent, and ethical, we need to weave these values into everything we do—whether it’s AI development, governance, or tackling ethical challenges like bias and privacy concerns.

In an age where every decision can be influenced by AI, the question isn’t whether you can innovate; it’s how you innovate. And the answer is clear: responsibly. This isn’t just about staying compliant with AI regulations; it’s about fostering an ecosystem where AI benefits everyone, not just the tech giants. When AI systems are built with accountability and ethics at the core, you’re not just innovating; you’re transforming industries and shaping a future that’s better for all.

At Colladome, we live and breathe this approach. We believe that ethical AI isn’t just a checkbox for our projects; it’s the compass that guides us as we create solutions that empower businesses, governments, and communities alike. Our POV? Innovation without responsibility is a fast track to disaster, but when done right, AI innovation can be a force for good—transformative, inclusive, and truly game-changing.

So, if you’re ready to future-proof your AI solutions, embrace the ethical approach. Trust us, it’s not just a trend. It’s the future.

Call to Action

“Want to be a part of the AI revolution that’s ethical, accountable, and transparent? Let’s get to work. At Colladome, we’re all about building AI systems that don’t just work—they work right. Reach out to our team of AI ethics experts today, and let’s make sure your AI development is as responsible as it is innovative.”

Colladome is here to guide you through the maze of AI governance, so your business doesn’t just keep up with the times—it leads the way. Get in touch now, and let’s build AI that’s not only groundbreaking but built on a foundation of integrity. Let’s innovate, responsibly.